222 reads

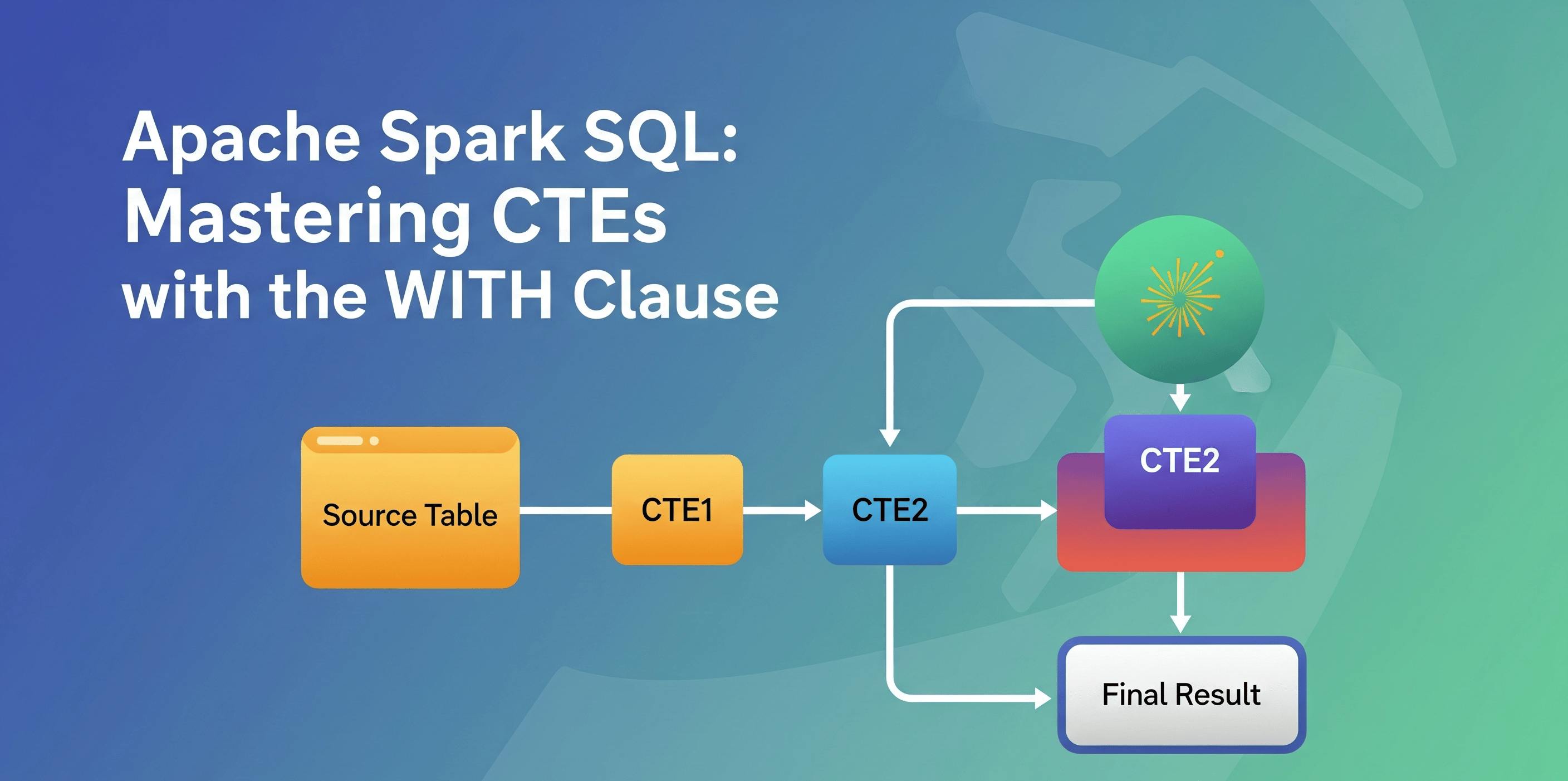

How to Write Complex Queries in Apache Spark SQL Using CTE (WITH Clause)

by

June 29th, 2025

Audio Presented by

I am a Senior Software Engineer with over 12 years of experience in the IT industry

Story's Credibility

About Author

I am a Senior Software Engineer with over 12 years of experience in the IT industry